How to Use AI Safely in 2026: Ethical AI Tips for Beginners

Using AI is now part of everyday life, from writing and research to design, customer support, and coding. That is why understanding exactly how to use AI safely in 2026 has become a basic digital survival skill, just like recognizing phishing emails or managing strong passwords. This guide is written for beginners, creators, students, and small businesses who want practical, human‑centric advice on using AI tools without compromising ethics, privacy, or trust.

AI can save enormous amounts of time, unlock creativity, and automate boring tasks, but unsafe use can leak data, spread misinformation, or amplify hidden bias. When people learn how to use AI tools safely in 2026, they protect not only themselves but also their audiences, clients, and collaborators from preventable harm. This article blends explanations, tables, and checklists so that ethical AI practice feels simple and repeatable for beginners instead of abstract or technical.

Throughout the article, you will see real‑world angles: how to choose safe tools, what to avoid in prompts, how to verify outputs, and how to build daily routines that make safe behavior automatic. Learning these habits now will keep you prepared as new tools, rules, and risks appear in the years ahead.

Table: Core pillars of safe AI use in 2026

Learning how to use AI safely in 2026 is now as important as learning basic cybersecurity, because these tools touch almost every digital interaction you have.

Why AI Safety Matters So Much in 2026

By 2026, AI is deeply built into search engines, document editors, phones, browsers, and workplace platforms, often running quietly in the background. That convenience hides the fact that many tools process sensitive queries, documents, and behavior patterns, so knowing how to use AI safely in 2026 is essential for protecting privacy and reputation. When beginners understand the risks, they are less likely to treat AI as a harmless toy and more likely to treat it as a serious system that deserves respect.

Modern models generate long‑form text, code, realistic images, voices, and videos that can be hard to distinguish from human work. This power can be used positively for education and creativity, but it also enables deepfakes, impersonation scams, and automated misinformation if misused. People who know how to use AI tools safely in 2026 learn to question striking outputs, verify sources, and avoid amplifying content just because it “looks real.”

Governments, standards organizations, and large tech companies are responding with new AI ethics frameworks and safety guidelines. Groups like UNESCO, ISO, and national AI missions emphasize transparency, accountability, and human oversight as mandatory elements of responsible use, not optional extras. When individuals take time to learn basic AI ethics, they stay aligned with this emerging global direction and reduce the risk of compliance or trust issues later.

Table: Key dimensions of AI safety in 2026

When you clearly understand how to use AI safely in 2026, you are not just following rules; you are building long‑term trust with your audience, customers, or community.

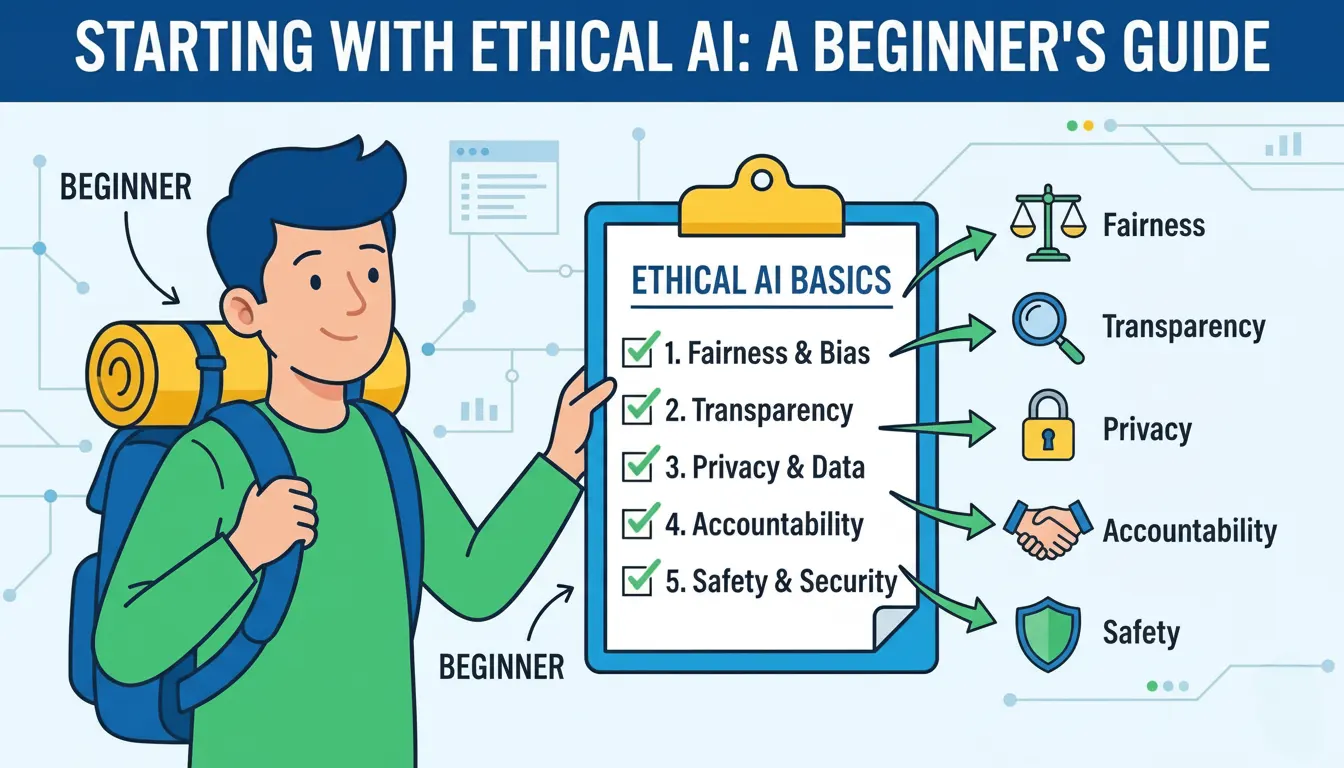

Ethical AI Tips for Beginners in 2026

Ethical AI tips for beginners are about behavior in real situations: which data you share, how you frame prompts, when you disclose AI use, and when you say “no” to unsafe ideas. Many newcomers focus only on speed (faster blogs, faster code, faster designs) and ignore fairness, privacy, and honesty, which are just as essential to using AI safely in 2026. Starting with a few simple rules makes responsible behavior feel natural instead of burdensome.

A critical habit is checking where information comes from and where it goes. If a model generates legal, medical, or financial content, that text should be treated as a draft, not a final answer, until confirmed against trusted external sources. Similarly, because many tools store prompts to improve models, users should never paste passwords, identity numbers, or client secrets into public AI interfaces.
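The "never paste secrets" rule can be partly automated by scrubbing a prompt before it leaves your machine. The sketch below is a minimal illustration, not a complete safeguard: the `redact` function and its three regular expressions are assumptions made up for this example, and real identifiers (national ID formats, client codes) vary widely, so a human check is still needed.

```python
import re

# Hypothetical, deliberately simple patterns chosen for illustration.
# They will miss many real-world formats and may flag harmless text.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # "password: xyz", "api_key=xyz", "token = xyz" and similar.
    "SECRET": re.compile(r"(?i)\b(?:password|passwd|api[_-]?key|token)\s*[:=]\s*\S+"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace likely-sensitive spans and report which kinds were found."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            found.append(label)
            prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt, found


if __name__ == "__main__":
    safe, kinds = redact("Reply to jane@example.com, password: hunter2")
    print(safe)   # the cleaned prompt
    print(kinds)  # which categories were detected
```

If `kinds` is not empty, treat that as a prompt to stop and reread what you were about to send, rather than as proof that the cleaned text is safe.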

Honesty about AI assistance is another core ethical principle. If AI helped draft part of an article, outline, or script, noting that clearly in a footer, caption, or credits section makes work more transparent and avoids misleading people about authorship. Ethical intent also includes avoiding prompts that chase harmful, hateful, or illegal content, even “just to see what happens,” because this behavior encourages misuse and undermines broader efforts to keep AI safe.

Table: Practical ethical AI tips for beginners

These beginner‑friendly habits make safe AI use in 2026 feel simple and repeatable, even if you are just starting with AI tools for work or study.

How to Use AI Tools Safely in 2026 (Step‑by‑Step)

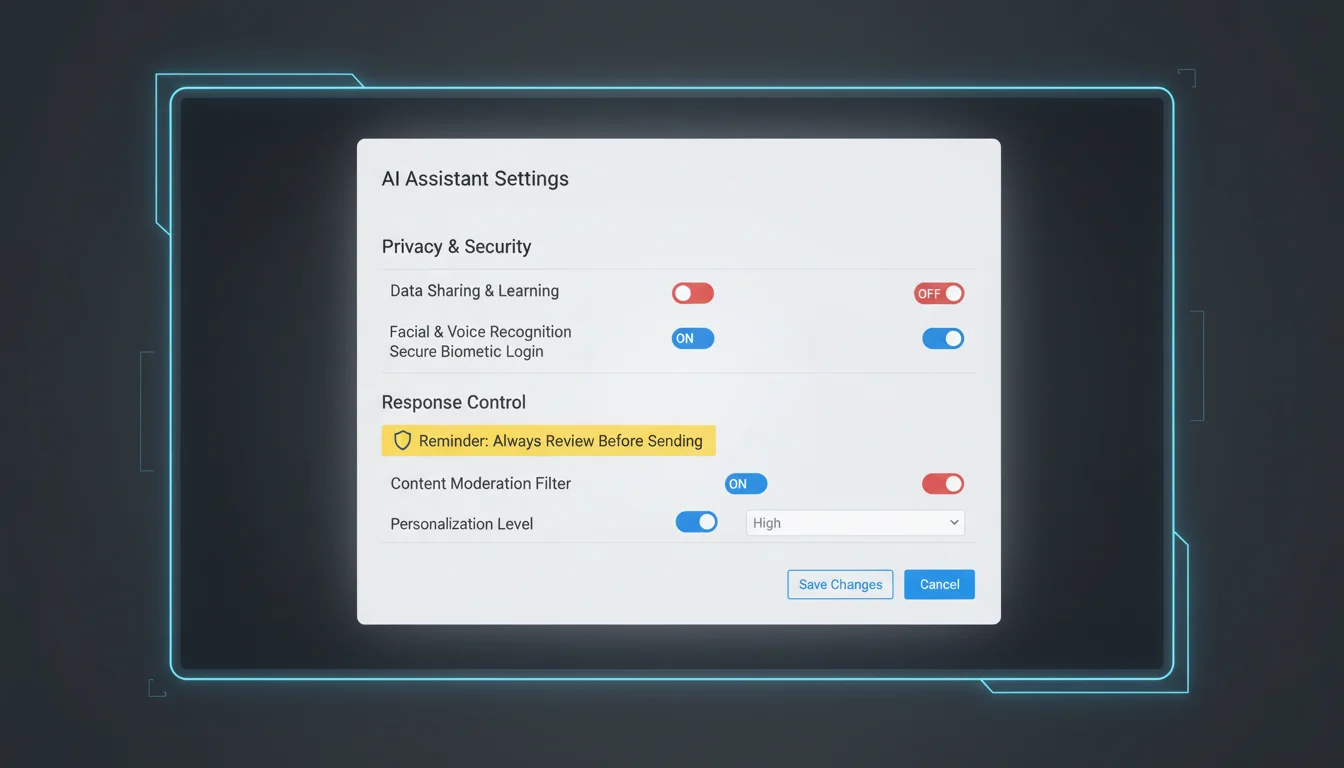

Safe AI use begins long before any prompt is typed; it starts with choosing platforms that respect users and comply with security and privacy expectations. Before registering, a quick check of privacy policies, data‑processing explanations, and security pages reveals how seriously a vendor treats safety. Services that clearly describe encryption, retention periods, and business‑grade options usually make a better foundation for safe AI use in 2026 than vague or opaque tools.

After selecting tools, account security becomes the second line of defense. Strong, unique passwords and multi‑factor authentication prevent unauthorized access to chat histories, uploaded files, and integrations tied to other cloud systems. This is especially important when AI tools connect to email, storage, CRM, or internal documentation, because one breached account can expose many other services.
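To make "strong, unique passwords" concrete, here is a small sketch that uses Python's standard `secrets` module to generate one. The function name `generate_password`, the 20-character default, and the 12-character minimum are assumptions chosen for illustration; in practice a password manager does this for you and also stores the result.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password with lowercase, uppercase, digit, and symbol."""
    if length < 12:
        # Illustrative minimum, not an official standard.
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    # Generate a separate password for each AI account; never reuse one.
    print(generate_password())
```

A generated password only covers the first half of the advice; multi‑factor authentication still has to be switched on in each tool's account settings.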

During daily use, prompt design and output review are where safe AI use becomes concrete. Good prompts avoid personal identifiers, sensitive business data, and requests for unsafe or illegal content. Any AI output that can impact people, such as recommendations, summaries about real individuals, or content addressing health, money, or law, should be cross‑checked with reliable sources or domain experts before being treated as accurate.

Table: Safe AI usage workflow in 2026

If you follow this workflow every day, using AI safely in 2026 stops being a theory and becomes a practical process you can rely on.

Common Risks of Unsafe AI Usage (and How to Avoid Them)

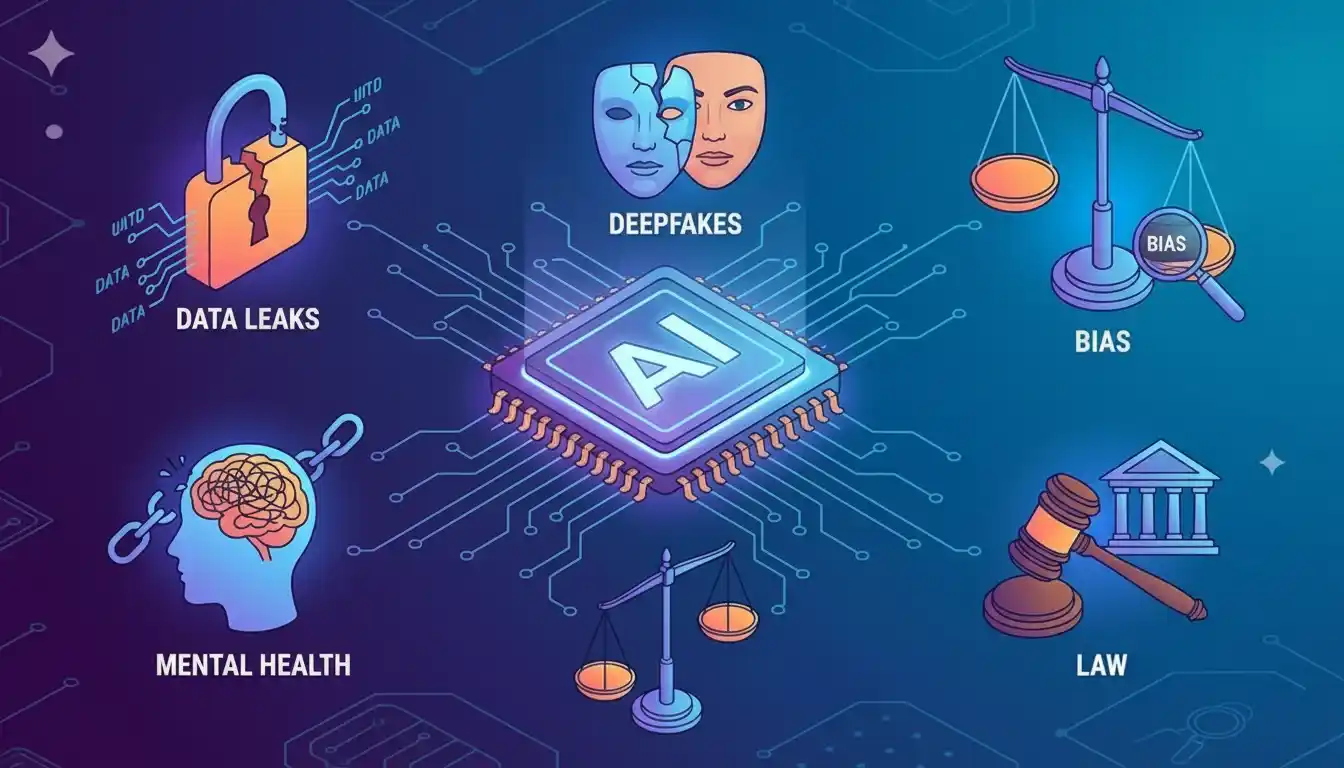

Even without malicious intent, careless AI use can lead to real damage. A single prompt containing confidential data, a fake image shared as real, or unreviewed AI text published on a brand channel can all trigger legal, financial, or reputational fallout. Knowing these risks is part of how to use AI safely in 2026, because awareness allows users to install safeguards before problems appear.

Data leakage remains one of the most serious concerns. Public AI tools may log prompts and attachments for model improvement, so sensitive content pasted into the interface can end up stored on external servers beyond your direct control. Professionals in law, healthcare, finance, HR, and government must be especially cautious, as many operate under strict data‑protection and confidentiality laws.

Another key risk is the role of AI in misinformation, scams, and manipulation. Generative models can produce convincing fake text, voices, or videos that attackers use for phishing, fraud, or propaganda. Over‑reliance on these tools for emotional support or life decisions can also weaken critical thinking and resilience, which is why using AI safely in 2026 involves mental balance and not just technical hygiene.

Table: Major AI risks and mitigation strategies

Building an Ethical AI Routine for Everyday Life

The most reliable way to maintain safety long term is to turn good practices into habits. Instead of treating guidelines as one‑time instructions, think of how to use AI safely in 2026 as part of your ongoing digital hygiene, alongside patching software, using password managers, and avoiding suspicious links. A simple routine makes it easier for beginners and teams to stay consistent without needing to memorize complex rules.

Start with an intent check each time you open an AI tool: are you brainstorming, summarizing, drafting, analyzing, or deciding? This helps separate tasks where AI is a good fit from tasks where human judgment must stay primary, like final approvals in finance, health, or interpersonal matters. Then add a verification step: any AI‑generated claim or recommendation that could affect other people should be checked quickly against trusted sources or human experts before action.
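The intent check and verification step described above can be written down as a tiny decision helper. This is only a sketch of the routine as this article describes it: the `Task` fields, the `review_plan` function, and the exact wording of each step are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass

# The five intents named in the routine above.
KNOWN_INTENTS = {"brainstorming", "summarizing", "drafting", "analyzing", "deciding"}
# Areas where the article says human judgment must stay primary.
HUMAN_PRIMARY_DOMAINS = {"finance", "health", "interpersonal"}


@dataclass
class Task:
    intent: str           # one of KNOWN_INTENTS
    domain: str           # free-form label, e.g. "marketing" or "health"
    affects_others: bool  # could the output affect other people?


def review_plan(task: Task) -> list[str]:
    """Return the human checks to perform before acting on AI output."""
    if task.intent not in KNOWN_INTENTS:
        raise ValueError(f"unknown intent: {task.intent!r}")
    steps = []
    if task.intent == "deciding" or task.domain in HUMAN_PRIMARY_DOMAINS:
        steps.append("human makes the final call")
    if task.affects_others:
        steps.append("verify claims against trusted sources or an expert")
    if not steps:
        steps.append("AI draft is fine; skim before use")
    return steps


if __name__ == "__main__":
    print(review_plan(Task("drafting", "health", affects_others=True)))
```

The point of writing it out is not to automate judgment but to make the two questions (what am I doing, and who does it affect) hard to skip.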

Continuous learning is the final pillar of an ethical routine. AI capabilities, privacy laws, and best practices change rapidly, with new patterns, threats, and safeguards emerging every year. Reading or watching one short update per week from reliable organizations keeps your understanding fresh and aligns your habits with the latest thinking on how to use AI tools safely in 2026 and beyond.

Table: Everyday ethical AI routine

Over time, this routine builds safe AI use into your normal digital habits so you do not have to think about every small decision.

Daily Safe AI Checklist (Quick Reference)

A compact checklist gives you a fast reminder of what to do before and after using AI each day. Beginners and small teams can pin this near their workspace or in internal docs to keep everyone aligned without heavy policy documents. It condenses much of how to use AI safely in 2026 into five quick checks that cover data, tools, outputs, oversight, and compliance.

Table: Daily safe AI checklist

Final Thoughts: Human‑Centric AI in 2026 and Beyond

In 2026, AI is powerful enough to influence economies, education, communication, and personal choices, but it is still humans who decide how that power is applied. Mastering how to use AI safely in 2026 is about pairing curiosity with caution and speed with responsibility so that new tools genuinely improve life rather than complicate it.

When individuals and teams adopt these ethical habits, choose responsible tools, and follow practical routines, AI becomes a trustworthy collaborator instead of a threat. This human‑centric approach keeps fairness, transparency, and respect for rights at the center of AI adoption, which is vital in a world filled with synthetic content and automated decisions.

Anyone who takes time now to learn how to use AI safely in 2026 will be far more confident and resilient as AI becomes even more integrated into everyday life. Follow AI Tech Unboxed for more insights, guides, and real‑world examples on building a future where AI remains safe, ethical, and truly human‑aligned.

FAQs on How to Use AI Safely in 2026

1. What does it mean to use AI safely in 2026?

Using AI safely means choosing trustworthy tools, protecting sensitive data, avoiding harmful prompts, and always applying human review before trusting important outputs.

2. Are AI tools safe for beginners to use?

Yes, AI tools can be safe for beginners if they avoid sharing private information, verify key facts, and follow basic ethical guidelines instead of trying to bypass system safeguards.

3. How can small businesses manage AI risks?

Small businesses can create simple internal rules, select reputable vendors, train staff on data privacy, and regularly review high‑impact AI use cases for error or bias.

4. Can AI outputs be trusted without checking?

No. Even advanced models can make mistakes or generate biased content, so critical AI outputs should always be checked against reliable sources or domain experts before use.

5. How do I keep up with changing AI safety rules?

Follow trusted organizations, standards bodies, and national AI initiatives that publish AI and privacy updates, and review your own practices at least once a year.