Whisper Transcription: The Human-Centric Revolution in Speech-to-Text (2025)

Introduction: Why Whisper Transcription Is Changing Everything

The way we interact with technology has always been shaped by language, but spoken language has never moved so easily into the digital world as it does today. Enter Whisper, OpenAI’s open-source speech recognition model, first released in 2022 and steadily improved since. If you’ve ever struggled with inaccurate transcripts, missed nuances, or muddled voice notes, you’ll understand that accuracy in speech recognition isn’t just a convenience; it’s a necessity. Whisper Transcription is redefining how we capture, process, and share spoken information, empowering creators, businesses, educators, and developers alike.

At first glance, Whisper might seem like just another Speech-to-Text system in a crowded field. Dig deeper, though, and you find a model trained on an unusually large body of real-world speech: 680,000 hours of labeled audio for the original release, and considerably more for later versions, spanning dozens of languages and countless recording environments. This is AI that adapts. Whether you’re transcribing a noisy interview, translating a foreign-language podcast into English, or auto-captioning a course for international students, Whisper’s blend of flexibility, precision, and inclusiveness makes it a game changer.

Whisper doesn’t just match sounds to words. Its decoder works like a language model conditioned on the audio, so it uses the surrounding words to choose between plausible phrasings, handles technical jargon better than many older systems, and copes well with background noise. The payoff goes beyond high-quality transcripts: captions for people who are deaf or hard of hearing, faster searchability for content creators, productivity for enterprises, and knowledge democratization worldwide.

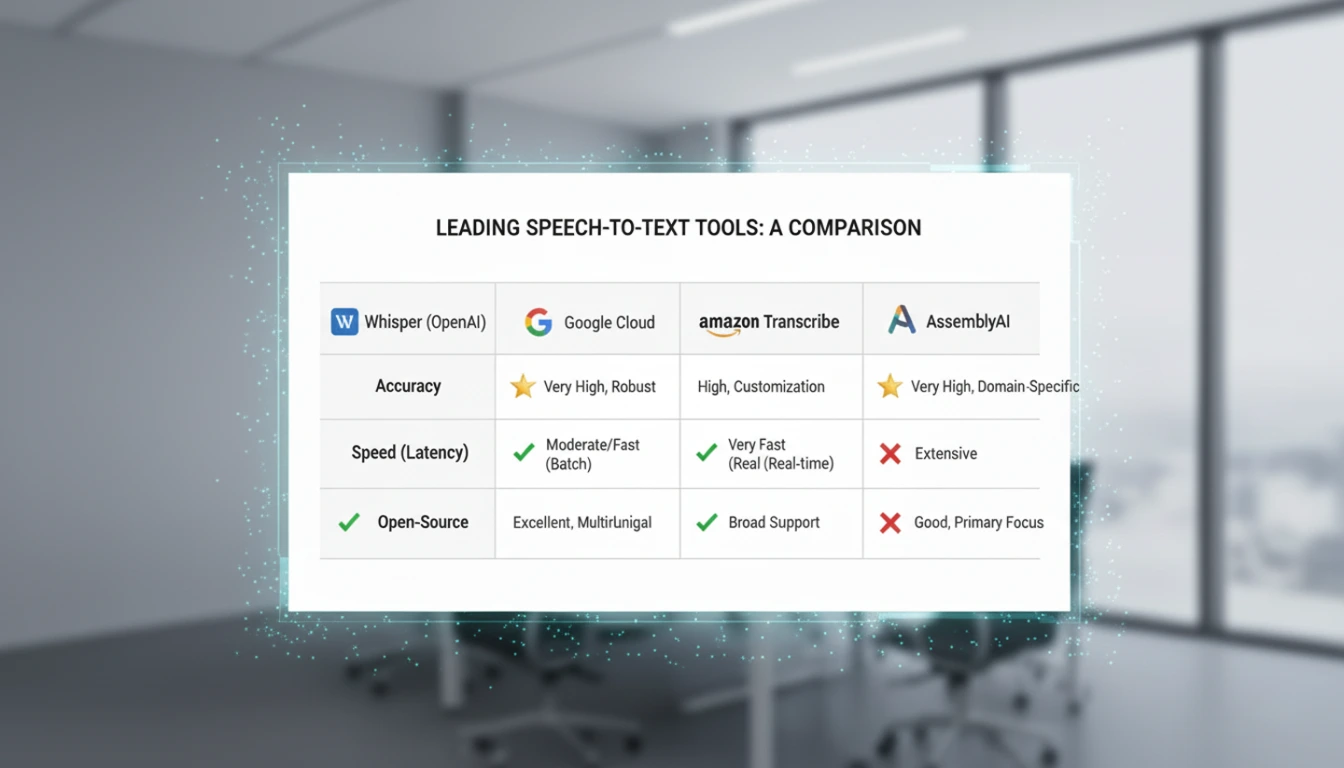

What separates Whisper from many competitors isn’t only the model itself but the open-source community around it. Because the code and model weights are released under the MIT license, developers have built faster ports such as whisper.cpp and faster-whisper and plugged Whisper into everything from video-captioning tools to voice assistants, creating faster, smarter, and cheaper workflows. In a world where every second counts, automating accurate Speech-to-Text is a real leap forward.

The next time you press record on your phone, remember: the technology turning your voice into a written memory may be powered by Whisper, forever changing the way we convert speech into action.

Whisper’s Technological Advantage: Why It Dominates Speech-to-Text

Traditional Speech-to-Text tools have always had their limits. From spotty accuracy and poor accent recognition to clunky integrations, creators and professionals alike have wanted something better. Whisper’s strength starts with its backbone: an encoder-decoder transformer trained to handle transcription, translation into English, and language identification within a single model.

The real magic of Whisper is its training. Unlike many earlier models, Whisper learned from a colossal multilingual dataset of audio and transcripts collected from the web, spanning a huge range of speakers, topics, and recording conditions. That breadth helps it handle regional accents, technical terms, and noisy environments with a remarkably human touch. It isn’t flawless: switching languages mid-sentence remains difficult, and dense specialist terminology can still trip it up.

Whisper was not built as a streaming system; it transcribes audio in 30-second windows. Still, businesses in customer service, law, healthcare, and content production benefit greatly: meetings and interviews can be transcribed within minutes of recording, saving valuable time and reducing manual labor. With the smaller or turbo models on capable hardware, and with community wrappers that feed audio in rolling windows, latency drops low enough for near-real-time uses such as live captioning, though high-stakes settings like court reporting still call for human review.
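Under the hood, the openai-whisper package decodes audio in fixed 30-second windows rather than as a continuous stream, so near-real-time setups typically buffer incoming audio and hand the model overlapping windows. The sketch below shows only that buffering step, assuming 16 kHz mono samples arrive as lists of floats; `RollingWindow`, `window_s`, and `overlap_s` are hypothetical names, and the model call itself is left out.

```python
from collections.abc import Iterable, Iterator

SAMPLE_RATE = 16_000  # Whisper expects 16 kHz mono audio


class RollingWindow:
    """Hypothetical helper: buffers streamed samples and emits overlapping windows."""

    def __init__(self, window_s: float = 30.0, overlap_s: float = 5.0) -> None:
        if not 0 <= overlap_s < window_s:
            raise ValueError("overlap must be non-negative and shorter than the window")
        self.window = int(window_s * SAMPLE_RATE)
        self.step = self.window - int(overlap_s * SAMPLE_RATE)
        self.buffer: list[float] = []

    def push(self, chunk: Iterable[float]) -> Iterator[list[float]]:
        """Add newly captured samples and yield every full window now available."""
        self.buffer.extend(chunk)
        while len(self.buffer) >= self.window:
            yield self.buffer[: self.window]
            # Keep the tail so consecutive windows overlap and words at the seam aren't cut.
            del self.buffer[: self.step]
```

A shorter window with a few seconds of overlap keeps latency down while giving the model context at each seam; the words duplicated in the overlap then have to be reconciled when the text is stitched together, which is where dedicated streaming wrappers do most of their work.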

What really sets Whisper apart for developers and businesses is its open-source nature. You can download, fine-tune, and customize the transcription pipeline for almost any industry use case, which gives you control over privacy, compliance, and cost. For example, sensitive legal or medical recordings never have to leave your own hardware, an option that closed, cloud-only competitors cannot offer.
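As a minimal sketch of fully local transcription, assuming the open-source `openai-whisper` package and ffmpeg are installed, the function below loads a model checkpoint and transcribes a file on the same machine. The weights are downloaded once and cached; the audio itself is never uploaded. `transcribe_locally` is an illustrative name of ours, and its optional `model` argument exists only so the function can be exercised without downloading weights.

```python
from typing import Any, Optional


def transcribe_locally(
    audio_path: str,
    model: Any = None,
    model_name: str = "small",
    language: Optional[str] = None,
    initial_prompt: Optional[str] = None,
) -> dict:
    """Transcribe an audio file on this machine; the audio is never uploaded."""
    if model is None:
        # openai-whisper (pip install openai-whisper); also needs ffmpeg on the PATH.
        import whisper

        # Downloads the checkpoint on first use, then loads it from the local cache.
        model = whisper.load_model(model_name)
    result = model.transcribe(
        audio_path,
        language=language,              # None lets Whisper detect the language itself
        initial_prompt=initial_prompt,  # optional hint text, e.g. domain vocabulary
        fp16=False,                     # full precision, so this also runs on CPU
    )
    return {"language": result["language"], "text": result["text"].strip()}
```

For example, `transcribe_locally("consult.wav", language="en", initial_prompt="metformin, HbA1c")` would skip language detection and nudge the decoder toward the listed terms.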

Recent releases have focused on accuracy and speed. Large-v3, released in 2023, reduced errors across many languages, and large-v3-turbo, released in 2024, cut the decoder from 32 layers to 4 for much faster transcription at a small cost in accuracy. Whisper supports 99 languages, opening doors for global communication that once depended on expensive human transcription and interpretation. This is accessibility at scale: AI that listens for everyone.

At the core of Whisper’s accuracy is how much it has already heard. The released model doesn’t keep learning from your recordings, but because its training data was so diverse, it copes with slang, idioms, and hard-to-hear passages that trip up narrower systems. Take call center recordings full of overlapping chatter or fluctuating signal strength: where legacy STT systems often fail, Whisper can still produce a usable, timestamped transcript, and separate tools can layer speaker separation, sentiment analysis, and named-entity detection on top, turning chaos into clarity.

Context also helps Whisper recover from imperfect speech. If a podcast guest slightly mispronounces a scientific term, the surrounding words often steer the decoder toward the correct spelling. Whisper doesn’t flag anomalies on its own, but each segment it returns includes decoding statistics that applications can use to route doubtful passages to a human reviewer. In legal work, where every word matters, that review step is invaluable for maintaining transcript integrity, even with jargon-heavy cross-examinations or unclear audio.
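Whisper doesn’t attach a “please review” flag to its output, but the segments returned by openai-whisper’s `transcribe()` carry decoding statistics, including `avg_logprob` and `no_speech_prob`, that an application can turn into one. This is a minimal sketch of such a review queue. The cutoffs simply reuse the package’s default `logprob_threshold` (-1.0) and `no_speech_threshold` (0.6), which Whisper uses internally for other decoding decisions, so treat them as starting points to tune rather than validated review criteria; `flag_for_review` is a hypothetical helper.

```python
LOGPROB_FLOOR = -1.0     # openai-whisper's default logprob_threshold
NO_SPEECH_CEILING = 0.6  # openai-whisper's default no_speech_threshold


def flag_for_review(segments: list[dict]) -> list[dict]:
    """Return segments whose decoding statistics suggest a human should re-listen."""
    flagged = []
    for seg in segments:
        reasons = []
        if seg["avg_logprob"] < LOGPROB_FLOOR:
            reasons.append("low confidence")
        if seg["no_speech_prob"] > NO_SPEECH_CEILING:
            reasons.append("possibly no speech")
        if reasons:
            flagged.append({"start": seg["start"], "end": seg["end"],
                            "text": seg["text"].strip(), "reasons": reasons})
    return flagged
```

A segment flagged “possibly no speech” that nonetheless contains text deserves particular attention, since text appearing over silence is a classic hallucination pattern.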

The community’s embrace of Whisper’s open model has pushed innovation further. Healthcare developers fine-tune custom Whisper models on strongly accented regional and non-native English speech, improving dictation accuracy for clinicians. Entertainment companies have built auto-captioning services for live esports and gaming streams with low latency, something hard to achieve with consumer-level STT just a few years ago.

No less impressive, Whisper keeps improving. OpenAI has shipped several new checkpoints since the original launch, and the community contributes bug fixes, fine-tuned variants, and faster inference engines. Each round makes Whisper more accurate and better attuned to the ever-changing ways people worldwide speak and communicate.

Real-World Impact: Applications of Whisper Transcription Across Industries

Imagine if every meeting, interview, or brainstorming session you attended could be instantly transcribed and indexed—searchable, shareable, and easily stored forever. That’s the reality Whisper Transcription brings, and its impact is already being felt across industries.

In media and podcasting, journalists rely on Whisper to transcribe interviews, even those conducted in bustling cafes or with poor audio equipment. Audio creators, YouTubers, and video editors use Whisper-powered plugins for quick captioning, and Whisper can also translate speech in other languages into English subtitles. The difference isn’t just speed; it’s fidelity. Whisper produces punctuated, readable text that stays close to what was actually said, so far less context is lost between recording and publication.
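Captioning is one of the easiest workflows to automate because every Whisper segment already carries start and end times. The openai-whisper command-line tool can write subtitle files directly (`--output_format srt`); the sketch below shows what that conversion involves, assuming segments shaped like the package’s output (dicts with `start`, `end`, and `text`). The function names are our own.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Turn Whisper-style segments (start, end, text) into SRT subtitle text."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    # SRT separates cues with a blank line.
    return "\n".join(cues)
```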

The education sector is seeing a leap in accessibility. Professors use Whisper to turn lectures into searchable, timestamped transcripts; international students benefit from captions and English translations; and students with hearing impairments or learning differences get far better access to course material. Hybrid and remote learning, long hampered by technological barriers, becomes smoother and more inclusive with dependable Speech-to-Text.
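Searchable lecture transcripts can start from something as simple as an inverted index over timestamped segments, so a student can jump straight to the moment a term was mentioned. A minimal sketch, assuming each segment is a dict with `start` (seconds) and `text`; `build_index` and `search` are hypothetical helpers, and a production system would add stemming, ranking, and a proper search engine.

```python
import re
from collections import defaultdict


def build_index(segments: list[dict]) -> dict[str, list[float]]:
    """Map each lowercase word to the start times (seconds) of segments containing it."""
    index: dict[str, list[float]] = defaultdict(list)
    for seg in segments:
        for word in set(re.findall(r"[\w']+", seg["text"].lower())):
            index[word].append(seg["start"])
    return dict(index)


def search(index: dict[str, list[float]], query: str) -> list[float]:
    """Return start times of segments that contain every word in the query."""
    words = re.findall(r"[\w']+", query.lower())
    if not words:
        return []
    hits = set(index.get(words[0], []))
    for word in words[1:]:
        hits &= set(index.get(word, []))
    return sorted(hits)
```

Each returned time can serve directly as a playback offset into the lecture recording.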

Even in healthcare and law, Whisper is breaking barriers. Doctors can record patient notes during consultations with less privacy risk, since Whisper can run entirely on local, secured devices. Lawyers transcribe depositions automatically, cutting turnaround time (and costly paralegal hours) while keeping a human check on the final record. In business, every virtual call, client session, or brainstorm can produce an accurate, searchable transcript for future reference.

Companies scaling in multilingual markets find Whisper especially valuable. From cross-border team meetings to training resources and customer support, language becomes far less of a barrier. Automatic language detection means teams don’t have to specify each recording’s language in advance, although recordings that switch languages frequently may still need per-section handling.
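One detail matters for mixed-language recordings: when no `language` is given, openai-whisper’s `transcribe()` detects the language once, from the first 30 seconds, and keeps it for the whole file. A common workaround is to run language detection on each window (the package’s README shows `model.detect_language(mel)` returning per-language probabilities) and group the results. The sketch below covers only the grouping step, assuming one probability dict per 30-second window; `language_spans` is a hypothetical helper.

```python
def language_spans(
    window_probs: list[dict[str, float]], window_s: float = 30.0
) -> list[tuple[float, float, str]]:
    """Group consecutive windows by their most likely language.

    `window_probs` holds one {language_code: probability} dict per window,
    e.g. collected by running language detection on each 30-second window.
    """
    spans: list[tuple[float, float, str]] = []
    for i, probs in enumerate(window_probs):
        lang = max(probs, key=probs.get)  # most probable language for this window
        start, end = i * window_s, (i + 1) * window_s
        if spans and spans[-1][2] == lang:
            spans[-1] = (spans[-1][0], end, lang)  # extend the current span
        else:
            spans.append((start, end, lang))
    return spans
```

Each span can then be transcribed with its language set explicitly. Switches inside a single window, such as a mid-sentence change, remain invisible at this granularity.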

This is not just productivity—it’s life-changing accessibility, knowledge democratization, and new creative horizons for millions.

Let’s zoom in on some of the highest-impact field stories:

Education:

A major university in South America integrated Whisper Transcription into all online learning modules, boosting accessibility for students who couldn’t attend live sessions due to bandwidth issues. Professors simply uploaded their lecture recordings; within minutes, complete transcripts—accurate and readable in multiple languages—became available for all. The result wasn’t just higher grades; it was broader participation from underserved students who, for the first time, felt fully included in the digital classroom.

Healthcare:

A busy urban hospital replaced manual scribing with Whisper-driven dictation apps for both English and Spanish consultations. Each doctor saved about half an hour per shift, which added up to thousands of productive hours each year. Whisper’s privacy-centric, on-device setup also satisfied strict patient confidentiality regulations, delighting IT security teams and clinicians alike.

Media & Journalism:

A global news network started transcribing video interviews with field reporters using Whisper, slashing editing bottlenecks and delivering tightly subtitled international stories within hours—rather than days. Context-aware transcription also meant fewer “inaudible” notations and greater editorial confidence.

Business Operations:

International teams on fast-paced Zoom calls used Whisper to generate real-time meeting transcripts in both native and secondary languages. Sales, support, and training decks became instantly searchable, dramatically improving follow-up and knowledge-sharing.

Public Sector:

City councils and local governments seeking transparency began producing Whisper-powered, open-access transcripts of public meetings. Community trust soared as citizens accessed debates and policy explanations with accuracy and multi-language support previously unavailable from government websites.

Each case proves a simple truth: when machines finally understand us, we unlock new potential for efficiency, inclusion, and creativity in every sector.

Technical Foundations: How Whisper Delivers Human-Like Speech-to-Text

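The magic inside Whisper Transcription comes from a comparatively simple, well-engineered architecture. Incoming audio is resampled to 16 kHz, cut into 30-second windows, and converted into log-Mel spectrograms. A transformer encoder built on multi-head attention turns each spectrogram into a sequence of audio features, and a transformer decoder generates the text token by token, using special tokens to indicate the language, the task (transcribe or translate), and timestamps. Attention lets the model weigh context across the whole window, which is how it captures meaning and subtle phrasing that older pipeline systems missed.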
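The numbers behind that pipeline are easy to work out. This small sketch uses constants from the openai-whisper audio front end (16 kHz sampling, 30-second windows, a 160-sample hop between spectrogram frames, and a stride-2 convolution at the start of the encoder) to show what a single window becomes. `window_plan` is a hypothetical helper, and because the real `transcribe()` loop advances by the timestamps it decodes, its window count is only an estimate.

```python
import math

# Constants matching the openai-whisper audio front end.
SAMPLE_RATE = 16_000   # audio is resampled to 16 kHz mono
CHUNK_SECONDS = 30     # the encoder always sees a 30-second window
HOP_LENGTH = 160       # 10 ms between log-Mel spectrogram frames
CONV_STRIDE = 2        # the encoder's second convolution halves the frame count


def window_plan(duration_s: float) -> dict:
    """Rough shape arithmetic for a recording, assuming back-to-back 30 s windows."""
    samples_per_window = SAMPLE_RATE * CHUNK_SECONDS      # 480,000 samples
    frames_per_window = samples_per_window // HOP_LENGTH  # 3,000 spectrogram frames
    return {
        "windows": max(1, math.ceil(duration_s / CHUNK_SECONDS)),
        "samples_per_window": samples_per_window,
        "mel_frames_per_window": frames_per_window,
        "encoder_positions": frames_per_window // CONV_STRIDE,  # 1,500 positions
    }
```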

Many legacy Speech-to-Text tools were trained on smaller, domain-specific datasets. Whisper was instead trained on a very large and varied collection of audio paired with transcripts gathered from the web (OpenAI has not released the dataset itself), ranging from crystal-clear studio recordings to bustling street audio. This variety makes Whisper unusually robust: whether you’re a content creator recording in a noisy hotel or an educator capturing a massive auditorium, the model adapts well without extra tuning.

Speed and efficiency depend on which model you choose. Whisper comes in sizes from tiny (about 39 million parameters) to large (about 1.55 billion), and the turbo variant keeps most of large-v3’s accuracy while running much faster. The smaller models suit resource-limited environments such as laptops and some mobile devices, especially through optimized ports like whisper.cpp, while the larger ones serve as powerful backbone engines in GPU-backed cloud workflows.

Open-source availability keeps Whisper privacy-friendly and highly customizable. Enterprises in sensitive fields can fine-tune the model for industry-specific needs without sending confidential audio to external servers, which keeps regulatory and security teams satisfied without sacrificing transcription quality.

Finally, the future of Whisper-based tools isn’t just about raw transcription. Developers are exploring emotion detection, intent analysis, and even “context memory” built on top of Whisper transcripts, paving the way for voice-to-data solutions that actually “understand,” not just “hear.”

Whisper’s power is magnified by how easy it is for developers to adapt and extend. The open-source repository provides a Python API and a command-line tool, and the community has added high-performance ports such as whisper.cpp and faster-whisper, along with ready-made containers and integration samples. So whether you’re building a custom podcasting workflow, a hands-free medical dictation app, or a global customer support dashboard, Whisper fits into your ecosystem rather than the other way around.

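For workflow engineers, this means a great deal of flexibility. Want speaker diarization (dividing a transcript by speaker)? Whisper doesn’t do this itself, but its timestamped segments combine readily with diarization tools such as pyannote.audio. Need translation? Whisper translates speech from other languages into English out of the box. Sentiment tags and entity detection come from plugins and scripts built on top of Whisper’s output. For domain-specific vocabulary in law, healthcare, or technical training, organizations can pass an initial prompt listing key terms or fine-tune the model outright, either of which can substantially reduce post-editing.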
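Merging diarization output with a Whisper transcript mostly comes down to comparing time ranges. A minimal sketch, assuming a separate diarizer (for example pyannote.audio) has produced `(start, end, speaker)` turns; the turn format, the `SPEAKER_00`-style labels, and `assign_speakers` are assumptions for illustration. Each Whisper segment takes the speaker whose turn overlaps it the most.

```python
def assign_speakers(
    segments: list[dict], turns: list[tuple[float, float, str]]
) -> list[dict]:
    """Label each transcript segment with the speaker whose turn overlaps it most.

    `turns` are (start, end, speaker) tuples from a separate diarization tool;
    Whisper itself does not produce speaker labels.
    """
    labeled = []
    for seg in segments:
        best_speaker, best_overlap = "UNKNOWN", 0.0
        for start, end, speaker in turns:
            # Length of the intersection of the two time ranges (negative if disjoint).
            overlap = min(seg["end"], end) - max(seg["start"], start)
            if overlap > best_overlap:
                best_speaker, best_overlap = speaker, overlap
        labeled.append({**seg, "speaker": best_speaker})
    return labeled
```

Segments that overlap no turn are labeled UNKNOWN rather than guessed. When speakers interrupt each other, word-level timing (openai-whisper’s `transcribe()` accepts `word_timestamps=True`) gives finer boundaries to work with.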

The community’s appetite for innovation has also led to advanced features like “real-time AI translation” and “multimodal AI,” where Whisper transcripts integrate with visual analytics (think: matching a lecture’s spoken transcript to the professor’s slides). This creates new frontiers in accessibility tech, from AR captioning in smart glasses to language tutoring robots that transcribe and coach on pronunciation in real time.

Periodic model releases from OpenAI and an active community on GitHub and developer forums give developers support and a sense of where the technology is heading. This energizes small startups and solo creators, a crucial factor in leveling the field against bigger, proprietary tech conglomerates.

Challenges, Ethics, and the Road Ahead for Whisper Transcription

Like any disruptive technology, Whisper Transcription faces real challenges. Even the best Speech-to-Text models struggle with ambiguous accents, low-quality audio, overlapping speech, and code-switching between less common languages. Developers and users alike should remember that, however strong Whisper is, no system is infallible.

One frequently discussed issue is “AI hallucination,” where the transcription engine invents plausible-sounding text, often during silence, music, or unclear audio. Whisper is known to do this, and it can also get stuck repeating a phrase. Because such errors read naturally, they can slip subtle misinformation into legal, medical, or journalistic records. Human review of critical transcripts, together with automatic checks for suspicious output, remains crucial to keeping standards high.
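One cheap automatic check targets a well-known failure mode: the model getting stuck and repeating a phrase. Repetitive text compresses unusually well, so openai-whisper computes a zlib compression ratio for each decoded segment and, by default, treats values above 2.4 as a failed decode. The sketch below reproduces that measure with Python’s `zlib`; `looks_repetitive` is our own helper name, and the 2.4 cutoff is borrowed from the package default rather than tuned for any particular use.

```python
import zlib

REPETITION_CUTOFF = 2.4  # borrowed from openai-whisper's default compression_ratio_threshold


def compression_ratio(text: str) -> float:
    """Raw UTF-8 size divided by zlib-compressed size; repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def looks_repetitive(text: str, cutoff: float = REPETITION_CUTOFF) -> bool:
    """Flag a segment whose text compresses suspiciously well, e.g. a looped phrase."""
    return compression_ratio(text) > cutoff
```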

Ethical considerations run deeper still. Automated Speech-to-Text touches on critical areas like privacy, consent, and data ownership. Whisper’s open-source model greatly reduces cloud-based data exposure, giving users more autonomy—but it’s still vital for organizations to inform involved parties whenever recordings or real-time transcriptions are happening. Regulatory transparency is non-negotiable.

Another evolving challenge is ensuring balanced representation in training data. AI models need diverse inputs to accurately transcribe minority dialects, underrepresented languages, and unique speech patterns. The open-source community around Whisper helps by collecting data and fine-tuning models for underrepresented languages and dialects, working toward Speech-to-Text that serves everyone more fairly.

Best practice is now to require explicit, opt-in consent before using voice data for any automated transcription, especially in multi-party settings such as recorded meetings and interviews. Organizations should deploy clear policies and easy-to-use toggles that give people full choice over participation and over how their speech is stored. Whisper itself ships no compliance tooling, so teams handling regulated data must map their deployments to the relevant rules, such as HIPAA in the US and the GDPR in the EU, to avoid costly data mishandling.
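In code, an opt-in default is simple to express: no transcription until every participant has said yes, and anyone can withdraw at any time. The `Session` class below is a hypothetical sketch of that rule only; real deployments also need consent records, retention policies, and legal review.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical record of a recorded meeting and its participants' choices."""

    participants: set[str]
    consented: set[str] = field(default_factory=set)

    def record_consent(self, person: str, opted_in: bool) -> None:
        if person not in self.participants:
            raise ValueError(f"{person} is not a participant")
        if opted_in:
            self.consented.add(person)
        else:
            self.consented.discard(person)  # withdrawing consent is always allowed

    def may_transcribe(self) -> bool:
        # Opt-in by default: a missing answer counts as "no".
        return bool(self.participants) and self.participants <= self.consented
```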

On the social side, AI developers must constantly test for “accent privilege”—bias where certain dialects are transcribed better than others—and rebalance training data accordingly. This points toward a future where big data is paired with ethical, inclusive oversight—making sure Whisper keeps empowering, rather than excluding, unheard voices globally.

The public’s growing AI literacy, combined with real-world data regulation, will determine whether Speech-to-Text remains a force for good, or falls prey to the pitfalls seen in earlier waves of technology.

Yet, facing these hurdles inspires rapid innovation. From building advanced detection for code-switching to designing easily understood consent protocols, the evolving support network around Whisper is positioned to change not just tech but society for the better.

Final Thoughts: Whisper Transcription and the AI Tech Unboxed Future

As we look toward a world driven by real-time voice data, Whisper Transcription stands as a beacon for the next chapter of digital communication. Automated Speech-to-Text has never come so close to the careful listening of a skilled human transcriber, and never across so many languages, accents, and industries.

For creators, educators, and businesses, Whisper brings a genuine sense of empowerment. It frees up time, reduces costs, and helps ensure that what you say isn’t lost. For accessibility advocates, it breaks barriers, helping every voice be heard, stored, and remembered. And for developers, its open-source freedom fuels endless innovation, collaboration, and possibility.

If you’re looking to harness the power of your audio, automate daily workflows, or simply want one of the most accurate transcription tools available in 2025, Whisper is not just the future; it’s the present, ready for you now.

Looking ahead, there’s no slowing down. Developers are already testing interactive voice assistants that pair Whisper with other models to gauge not just what users say but also their mood and level of urgency. In media and language learning, researchers are building adaptive closed captioning for AR/VR headsets, aiming for fully immersive real-time language translation.

Expect to see more contextually intelligent tools—like project management bots that can track meeting actions directly from live audio or “note-to-knowledge” flows where Whisper transcripts are automatically summarized, tagged for deadlines, or linked to tasks in productivity apps.

The next generation of Whisper-based tools may introduce features such as “accent-adaptive” models that tune themselves based on user feedback, instant emotion or intent tagging for marketers, and multilingual “storyteller” bots that automatically weave spoken recordings into audiobooks or blog posts.

For every content creator, learner, or business leader, the message is clear: If you want to lead in a world where your voice is your most valuable asset, mastering AI tools like Whisper is the winning move.

Stay tuned to AI Tech Unboxed for the latest breakthroughs in AI, Speech-to-Text, and the tools that are defining the new era of intelligent communication.

FAQs on Whisper Transcription & Speech-to-Text

Q1: What is Whisper Transcription, and who created it?

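Whisper Transcription is an advanced Speech-to-Text AI model developed by OpenAI and first released in September 2022, engineered for reliable, multilingual, and highly accurate voice-to-text conversion. Its code and model weights are published under the MIT license.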

Q2: Is Whisper Transcription only useful for English speakers?

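No. Whisper recognizes speech in 99 languages and can translate speech from those languages into English, making it one of the most inclusive transcription tools available to creators, students, and businesses worldwide. Accuracy is highest for languages that were well represented in its training data.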

Q3: Can I use Whisper for sensitive or confidential audio files?

Yes, since Whisper is open source, it can be run locally—meaning your private audio never has to leave your secure device or environment, maintaining full privacy control.

Q4: How accurate is Whisper compared to traditional Speech-to-Text tech?

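Accuracy is usually measured as word error rate (WER), the share of words transcribed incorrectly. On clean English audio, the larger Whisper models can exceed 95% word accuracy (a WER below 5%), outpacing many legacy STT systems thanks to their massive, diverse training data and transformer architecture. Accuracy falls with heavy background noise, strong accents, overlapping speakers, and languages that were sparsely represented in training.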
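Word error rate is the standard yardstick behind accuracy figures like these: count the word substitutions, deletions, and insertions needed to turn the machine transcript into a reference transcript, then divide by the number of reference words. A minimal sketch using word-level edit distance; the lowercase-and-split normalization is a simplification (published Whisper results apply a more thorough text normalizer, which openai-whisper ships as `EnglishTextNormalizer`), and `word_error_rate` is our own function name.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,         # insertion: extra word in hypothesis
                prev[j - 1] + (r != h),  # substitution (free when the words match)
            )
        prev = curr
    return prev[-1] / len(ref)
```

Word accuracy is often quoted loosely as 1 − WER, so a 95% figure corresponds to a WER of about 5%.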

Q5: What are some unique use cases for Whisper Transcription in 2025?

Whisper powers live captions, video subtitling, meeting transcription, hands-free documentation, accessibility tools, and even voice-powered analytics—across nearly every industry you can imagine.